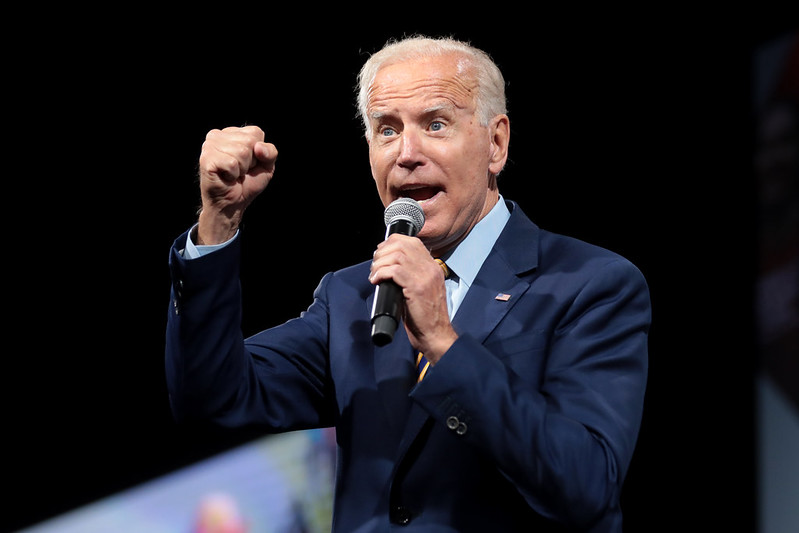

Former Vice President and current Democratic presidential candidate Joe Biden has promised that, if elected, he would “revoke immediately” the 1996 provision that gives tech companies like Facebook protection from civil liability for harmful or misleading content that users post on their platforms. The Stigler Center Committee on Digital Platforms has a proposal to fix the problem.

In a surprising statement, former Vice President and current Democratic presidential candidate Joe Biden announced that, if elected president, he would seek to repeal one of the most crucial pieces of legislation related to digital platforms: Section 230 of the Communications Decency Act. According to this 1996 provision, “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” Biden, who has personally clashed with Facebook while trying to have a defamatory political ad removed, said he wants to make social networks as accountable for the content they host as newspapers already are.

“[The New York Times] can’t write something you know to be false and be exempt from being sued. But [Mark Zuckerberg] can. The idea that it’s a tech company is that Section 230 should be revoked, immediately should be revoked, number one. For Zuckerberg and other platforms,” Biden said in an interview with The New York Times.

“If there’s proven harm that Facebook has done, should someone like Mark Zuckerberg be submitted to criminal penalties, perhaps?” The Times’ editors asked Biden, who replied: “He should be submitted to civil liability and his company to civil liability, just like you would be here at The New York Times.”

Structural reform of Section 230 is also one of the policy proposals in the recent final report of the Stigler Center Committee on Digital Platforms. The independent, nonpartisan Committee, composed of more than 30 highly respected academics, policymakers, and experts, spent over a year studying in depth how digital platforms such as Google and Facebook affect the economy and antitrust law, data protection, the political system, and the news media industry.

What follows is the Committee’s analysis of Section 230, its origins, and how digital platforms have used it far beyond its original scope, as well as the Committee’s proposals on how to amend it to improve the accountability of digital platforms, reduce the unfair subsidy they receive through the legal shield provided to them by Section 230, and improve the quality of the public debate without limiting freedom of speech.

The Origin of Section 230

Section 230 was enacted as part of the Communications Decency Act, itself Title V of the Telecommunications Act of 1996, to govern internet service providers (ISPs). In 1996, the ISP was to the ordinary publisher roughly what the scooter is to the automobile today: a useful invention, but one hardly on the verge of dominance.

Tarleton Gillespie writes: “At the time Section 230 was crafted, few social media platforms existed. US lawmakers were regulating a web largely populated by ISPs and web ‘publishers’—amateurs posting personal pages, companies designing stand-alone websites, and online communities having discussions.”

Although sometimes viewed as a sweeping libertarian intervention, Section 230 actually began life as a smut-busting provision: an amendment for the “Protection for Private Blocking and Screening of Offensive Material.” Its purpose was to allow and encourage internet service providers to create safe spaces, free of pornography, for children.

The goals at the time of adoption were (1) to give new “interactive computer services” breathing room to develop without lawsuits “to promote the continued development of the Internet,” while (2) also encouraging them to filter out harmful content without fear of getting into trouble for under- or over-filtering. Thus, Section 230 is both a shield to protect speech and innovation and a sword to attack speech abuses on platforms.

The shield part is embodied in Section 230(c)(1): “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” This is not blanket immunity for the distribution of content: platforms remain liable for their own content, and for federal crimes and copyright violations related to third-party content. The immunity is really limited to the speech-related harms that publishers ordinarily face, such as defamation and intentional infliction of emotional distress. In other words, a platform like Facebook remains liable for distributing child pornography, which is a federal crime. It also remains liable for defamatory content that Facebook itself authors.

Facebook cannot, however, be held secondarily liable for defamatory content posted by its users.

This was designed to avoid the paradoxical situation in which an intermediary tries to moderate its platform to reduce harmful content, but then is subject to liability because it has exercised editorial control.

Section 230 is sometimes characterized as a “get out of jail free card” for platforms. According to Chesney and Citron, “Section 230 has evolved into a kind of super-immunity that, among other things, prevents the civil liability system from incentivizing the best-positioned entities to take action against the most harmful content.”

To be sure, courts have extended immunity in situations that almost certainly go beyond what Congress originally intended. For example, platforms have been shielded from liability for transmitting otherwise illegal content even when they solicited that content and had clear knowledge of it. Moreover, platforms that do not function as publishers or distributors (e.g., Airbnb) have also invoked Section 230 to escape liability that has nothing to do with free speech.

The Stigler Center Report Proposal: Section 230 as a Quid Pro Quo Benefit

For reasons of risk reduction and harm prevention, legislators may well amend Section 230 to raise the standard of care that platforms must exercise and to increase their exposure to tort and criminal liability. Because our focus is on improving the conditions for the production, distribution, and consumption of responsible journalism, we have a different kind of proposal.

We look at Section 230 as a speech subsidy that ought to be conditioned on public interest requirements, at least for the largest intermediaries who benefit most and need it least. It is a speech subsidy not altogether different from the provision of spectrum licenses to broadcasters or rights of way to cable providers or orbital slots to satellite operators.

The public and news producers pay for this subsidy. The public forgoes legal recourse against platforms and otherwise bears the costs of harmful speech. News producers bear the risk of actionable speech, while at the same time losing advertising revenue to the platforms freed of that risk. Media entities have to spend significant resources to avoid legal exposure, including by instituting fact-checking and editing procedures and by defending against lawsuits. These lawsuits can be fatal, as in the case of Gawker Media. More commonly, media entities face the threat of “death by ten thousand duck-bites” from lawsuits, even if those suits are ultimately meritless.

The monetary value of Section 230 to platforms is substantial, if unquantifiable. Section 230 subsidies for the largest intermediaries should be conditioned on the fulfillment of public interest obligations.

In this report, we address transparency and data-sharing requirements that should apply generally to the companies within scope. However, there may be additional requirements that a regulator would not want to, or could not, impose across the board. For example, the UK has proposed requiring platform companies to ensure that their algorithms do not skew towards extreme and unreliable material to boost user engagement.

We would not recommend such a regulation, but it might be appropriate to condition Section 230 immunity on such a commitment for the largest intermediaries. We believe that Section 230 immunity for the largest intermediaries should be premised on requirements that are well-developed in media and telecoms law:

Transparency obligations: Whichever transparency requirements do not become part of a mandatory regulatory regime could be made a condition of Section 230 immunity. Platforms should give a regulator and/or the public data on what content is being promoted to whom, on the processes and policies of content moderation, and on advertising practices.

These obligations would go some way towards replicating what already exists in the off-platform media environment by virtue of custom and law. Newspaper mastheads, voluntary codes of standards and practices, use of ombudsmen, standardized circulation metrics, and publicly traceable versioning all provide some level of transparency that platforms lack. For broadcasters, there are reporting and public file requirements, especially with respect to political advertising and children’s programming.

Subsidy obligations: Platforms should be required to pay a certain percentage of gross revenue to fund a voucher system that supports traditional newspapers. In the US, telecom providers have been required to pay into the Universal Service Fund to advance the public interest goal of connectivity. Public media, by contrast, has not been funded by commercial broadcasters.

However, there was once a serious proposal at the advent of digital broadcast television for commercial broadcasters to “pay or play”—that is, to allow them to serve the public interest by subsidizing others to create programming in lieu of doing it themselves.

The nexus between the Section 230 benefit and the journalism subsidy is essentially this: Section 230 unavoidably allows many discourse harms and “noise” in the information environment. A subsidy for more quality “signal” goes some distance towards compensating for these harms.

It is important to stress that any tax that would be levied on the platforms has to go to the general budget and not be tied in any way to the voucher scheme proposed here—to prevent a situation whereby news outlets have an incentive to lobby directly or indirectly through their editorial agenda to protect the power and revenues of the platforms.

The ProMarket blog is dedicated to discussing how competition tends to be subverted by special interests. The posts represent the opinions of their writers, not necessarily those of the University of Chicago, the Booth School of Business, or its faculty. For more information, please visit ProMarket Blog Policy.