Social media platforms have moderated user-generated content since their inception. Economics helps us understand why they do it and how to regulate them.

Social media platforms remove posts and ban users to moderate their content. This practice is again in the spotlight with Elon Musk’s Twitter acquisition, but platforms have self-regulated for decades. As early as 2003, Friendster’s Terms of Service forbade promoting hatred or misleading or false information.

The widespread and persistent nature of content moderation suggests that it is an intrinsic characteristic of what platforms do. In a recent paper, I argue that a useful framework for thinking about this practice is to treat social media platforms as profit-maximizing firms that regard content moderation as just another business decision. Guided by economic theory, I conducted two field experiments with actual Twitter data and users.

Profit-maximizing companies moderate to increase user engagement with ads

Even if social media companies have algorithms that detect rule violations, they still need to hire or subcontract thousands of humans to review content manually and enforce their rules. Because moderation at a large scale is resource-intensive, it makes sense to increase the strictness of a platform’s moderation policy only until the additional costs equal the additional benefits.
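The stopping rule above (raise strictness until marginal cost equals marginal benefit) can be illustrated with a minimal sketch. The functional forms and parameter values below are assumptions made purely for illustration: a concave benefit with diminishing returns and a linear reviewing cost. They are not estimates from the paper.

```python
import math

# Hypothetical functional forms and parameters, chosen only for illustration.
def benefit(strictness: float, scale: float = 10.0) -> float:
    """Concave gain from moderation: each extra unit of strictness adds less."""
    return scale * math.log(1.0 + strictness)

def cost(strictness: float, unit_cost: float = 2.0) -> float:
    """Linear cost of human review."""
    return unit_cost * strictness

def marginal(f, x: float, h: float = 1e-6) -> float:
    """Central-difference approximation of the derivative of f at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def optimal_strictness(lo: float = 0.0, hi: float = 100.0, tol: float = 1e-8) -> float:
    """Bisect on (marginal benefit - marginal cost), which falls as strictness rises."""
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if marginal(benefit, mid) > marginal(cost, mid):
            lo = mid  # an extra unit of strictness still pays for itself
        else:
            hi = mid  # an extra unit of strictness costs more than it brings in
    return (lo + hi) / 2.0

s_star = optimal_strictness()
print(f"strictness where marginal benefit equals marginal cost: {s_star:.3f}")
```

With these assumed forms the condition 10 / (1 + s) = 2 can also be solved by hand, which gives s = 4; the numerical search is there only to show the logic for cases without a closed form.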

One potential benefit is preventing future regulation. Currently, a US provision known as Section 230 shields digital platforms from legal liability for what is posted on their websites. Twitter employees have been quoted as saying that, because of regulatory concerns, platforms have no incentive to keep content up. However, it is unlikely that staving off regulators alone can cover the costs of content moderation, for two reasons. First, social media platforms started moderating well before any regulatory threat, often with a scope that goes beyond existing laws. Second, even once regulation is in place, the actual monetary cost for platforms is minimal: Facebook has paid only 2 million euros in fines for violating Germany’s anti-hate speech law (NetzDG). Thus, this is not likely to be the main reason for social media firms to moderate content.

Another benefit often mentioned is a potential increase in advertiser demand in response to improved brand safety. But it turns out that moderation is not essential for this purpose, as platforms can effectively regulate the prevalence of toxic content and improve brand safety by carefully optimizing their advertising loads.

Therefore, at least theoretically, the most important benefit that social media companies get from improving their content moderation is an increase in user engagement, and hence, advertising revenue. In practice, we should see that small increases in moderation lead to higher engagement for at least some users, for example those who are attacked or offended by hate speech or who dislike misinformation. Additionally, we should expect that moderation is more likely to occur in cases where those who benefit from it increase their engagement substantially, while those who are harmed do not decrease their engagement too much.

I tested this intuition with data by measuring the effects of moderating hate speech on user behavior. To do so, I experimented with Twitter’s reporting tool that allows users to report content that violates the platform rules. I sampled 6,000 tweets containing hate speech and randomly reported half of them. This strategy induced Twitter to moderate the reported users at a higher rate than those I did not report, by deleting their tweets and, likely, locking their accounts.
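The random split described above can be sketched in a few lines. This is a minimal illustration that assumes simple complete randomization into two equal arms; the text does not say whether the actual assignment was stratified or blocked, and the tweet identifiers and the `assign_treatment` helper are hypothetical.

```python
import random

def assign_treatment(tweet_ids, seed=0):
    """Shuffle the sample and send the first half to the 'reported' arm."""
    rng = random.Random(seed)      # fixed seed so the split is reproducible
    shuffled = list(tweet_ids)     # copy, so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "reported": set(shuffled[:half]),  # tweets that get reported to the platform
        "control": set(shuffled[half:]),   # tweets that are left alone
    }

# Placeholder identifiers standing in for the 6,000 sampled hateful tweets.
tweet_ids = [f"tweet_{i}" for i in range(6000)]
arms = assign_treatment(tweet_ids)
print(len(arms["reported"]), len(arms["control"]))
```

Because assignment depends only on the shuffle, the two arms should look alike on average before any report is filed, so later differences in outcomes can be attributed to the reports.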

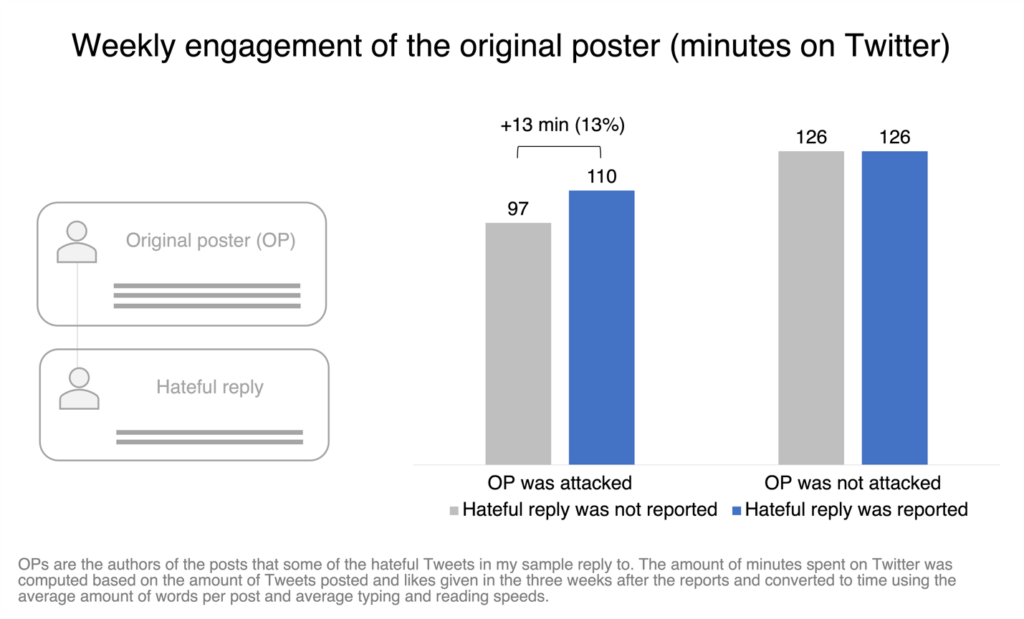

The accompanying chart shows that reporting hateful content can indeed increase user engagement. It provides evidence from a subsample of hateful tweets that replied to other users, often attacking them. Reporting hateful replies increased the time that the attacked users spent on the platform by 13 minutes per week (a statistically significant 13% increase on average).

Besides this effect, I found no evidence that the reports changed the engagement or toxicity of the authors of the hateful posts or their network. Hence, it seems that the reporting experiment resulted in a net increase in users’ time spent on the platform.

While experimental or quasi-experimental evidence such as this is scarce, more recent papers provide results supporting parts of the theory. For example, studies evaluating content moderation policies on Twitter and Facebook also find a null or a very transitory effect on the engagement of moderated users, meaning that moderating inappropriate posts does not have a negative impact on the platform. This evidence contrasts with most of the prior research using observational data and non-causal evidence that found that moderated users decrease their engagement. This contrast highlights the importance of conducting more causal studies in this vein.

Without data, it is not obvious whether platforms moderate too little or too much

Under an advertising-driven business model, profit-maximizing platforms choose the strictness of their moderation policies to maximize their advertising revenue net of costs. However, platforms face an inevitable trade-off: they must strike a balance between increasing engagement from those who like moderation and not overly angering those who dislike it.

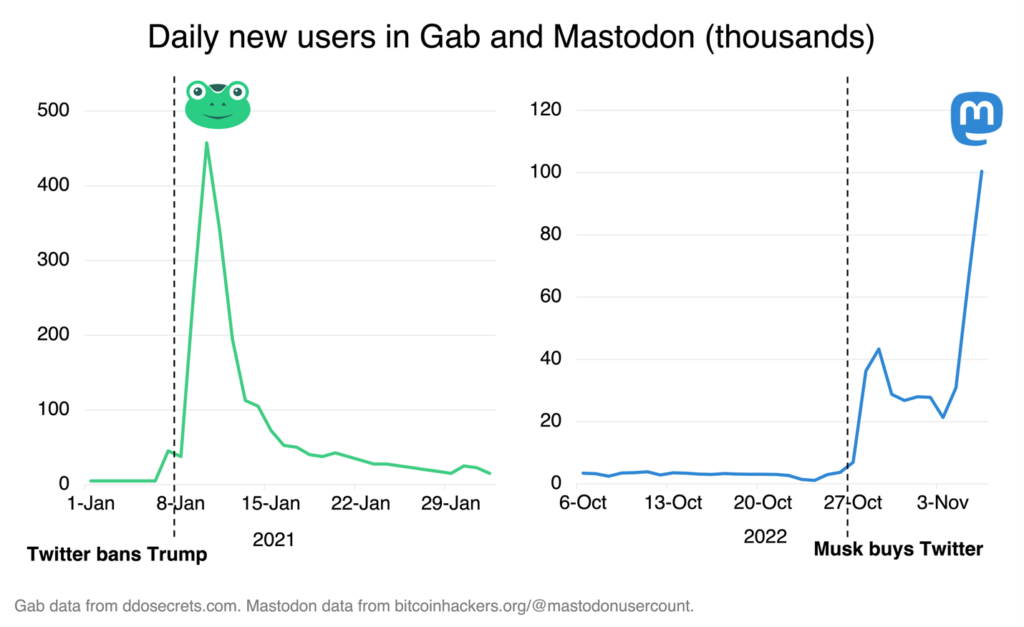

Twitter’s recent experience offers an example of this trade-off. The accompanying chart shows that the number of daily new users of Gab (a platform known for its far-right user base) skyrocketed around the time that social media companies suspended former US president Donald Trump. More recently, concerns that Elon Musk’s Twitter acquisition will relax its moderation practices triggered a migration to Mastodon, a social platform that is considered to be a safer, decentralized alternative. In both cases, it appears that changes in the perceived strictness of content moderation policies (in opposite directions) led some users to search for alternative platforms.

Even purely profit-maximizing companies have incentives to self-regulate and to balance the often-opposing interests of their customer base. Yet, the relevant question is whether profit-driven incentives are the right ones: those that generate the largest possible social surplus.

Economics helps answer this question because sanitizing social media resembles the problem producers face when they can adjust not only the quantity of products they sell but also their quality. In other words, content moderation is a quality decision for platforms. Seminal work has shown that producers set the quality of their products to attract new customers, so their product quality can be too high or too low for their existing customers. Hence, without data, it is not obvious whether Twitter moderates too much or too little, from the perspective of the benefit its users get.

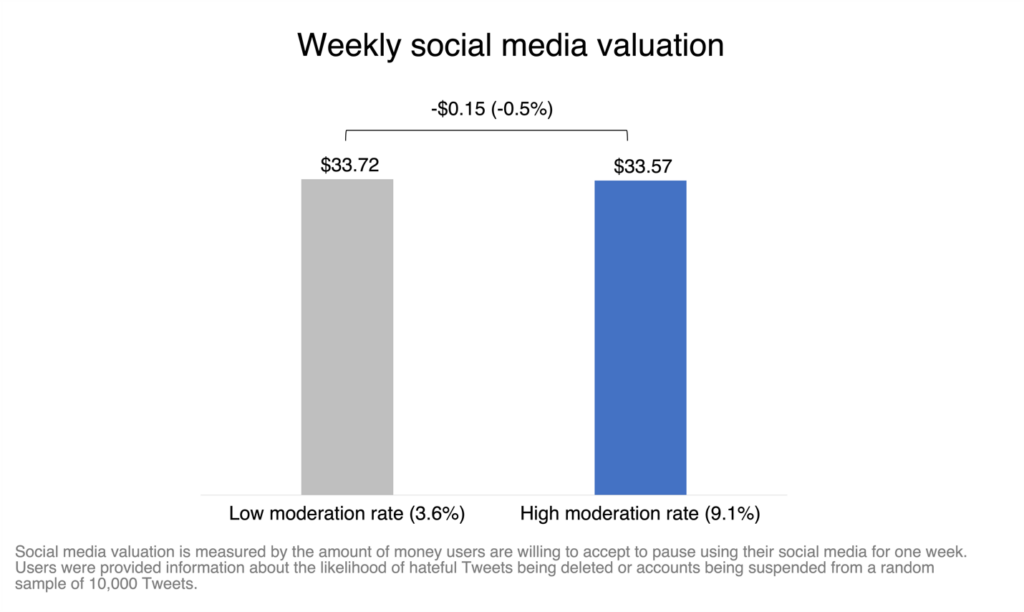

To settle this question with data, I ran a survey experiment with 3,000 Twitter users. I randomly gave half of them information about a low rate of moderation on Twitter, and the other half received information about a higher rate. The accompanying chart shows the results: expecting a stronger content moderation policy does not increase the value users get from social media. In monetary terms, both groups value the experience at about $33 per week. This result suggests that, all else constant, Twitter is neither over-moderating nor under-moderating hateful content.
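A comparison between two randomly assigned groups like this one boils down to a difference in means and its standard error. The sketch below shows that calculation on made-up numbers; it is not the survey data, and it is not necessarily the estimator used in the paper. The `difference_in_means` helper and the sample values are hypothetical.

```python
import math
import statistics

def difference_in_means(treated, control):
    """Return (difference, standard error) for two independent samples."""
    diff = statistics.mean(treated) - statistics.mean(control)
    # Unequal-variance standard error: sample variance over n, summed across arms.
    se = math.sqrt(
        statistics.variance(treated) / len(treated)
        + statistics.variance(control) / len(control)
    )
    return diff, se

# Made-up weekly valuations in dollars, NOT the survey responses.
low_info = [30.0, 35.0, 33.0, 34.0]    # told about a low moderation rate
high_info = [31.0, 36.0, 32.0, 33.0]   # told about a higher moderation rate

diff, se = difference_in_means(high_info, low_info)
print(f"difference: {diff:.2f} dollars per week, standard error: {se:.2f}")
```

A difference that is small relative to its standard error is what "no detectable effect on valuation" looks like in this kind of comparison.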

Of course, the benefits to Twitter users are not the whole story. The debate about content moderation often centers on real-world outcomes, such as violence and democratic participation. However, without empirical evidence, it is again not obvious whether moderation results in net benefits or societal costs. For example, on the one hand, YouTube’s moderation algorithms erased documentation of human rights violations during Syria’s civil war. On the other hand, there is evidence that stronger content moderation induced by Germany’s NetzDG law helped reduce hate crimes in localities more exposed to hate speech.

Ad loads are the new prices

A major obstacle for regulators is the prevalence of zero (monetary) prices in digital markets. But because social media users “pay” with their attention and data, the relevant price for them is the advertising load, or the frequency with which they see ads in their feeds. Like a regular price, a higher ad load increases platform revenue per hour of user engagement, but it decreases users’ willingness to engage with the platform. In fact, there is a nascent literature documenting how users of digital services respond to advertising loads.

Since platforms can easily customize the ad load displayed to different users, they can also control their engagement without the need for moderation. Theoretically, a platform could achieve the same prevalence of hate speech with either a low or a high moderation rate. It could do so by pairing the high moderation rate with a “discount” in the ad loads of users who tend to post hate speech.
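A toy model makes this equivalence concrete. Everything in the sketch below is an assumption made for illustration: engagement of hate-prone users falls linearly with both ad load and moderation, and the hate speech that remains visible is engagement times the share of posts not moderated. The function names and parameter values are hypothetical and are not taken from the paper.

```python
def engagement(ad_load, moderation_rate, base=10.0,
               ad_sensitivity=1.0, mod_sensitivity=2.0):
    """Activity of hate-prone users; falls with ad load and with moderation."""
    return max(0.0, base - ad_sensitivity * ad_load
               - mod_sensitivity * moderation_rate)

def surviving_hate(ad_load, moderation_rate):
    """Hateful activity that remains visible after moderation."""
    return engagement(ad_load, moderation_rate) * (1.0 - moderation_rate)

def matching_ad_load(target, moderation_rate, base=10.0,
                     ad_sensitivity=1.0, mod_sensitivity=2.0):
    """Ad load that leaves `target` surviving hate at the given moderation rate."""
    needed_engagement = target / (1.0 - moderation_rate)
    return (base - mod_sensitivity * moderation_rate
            - needed_engagement) / ad_sensitivity

# Policy A: low moderation rate paired with a high ad load.
low_mod, low_mod_ad_load = 0.1, 4.0
target = surviving_hate(low_mod_ad_load, low_mod)

# Policy B: high moderation rate; solve for the ad load that keeps prevalence fixed.
high_mod = 0.3
high_mod_ad_load = matching_ad_load(target, high_mod)

print(f"policy A: moderation {low_mod}, ad load {low_mod_ad_load}")
print(f"policy B: moderation {high_mod}, ad load {high_mod_ad_load:.2f}")
```

Under these assumptions, the ad load that keeps prevalence unchanged at the higher moderation rate is lower than the original one, which is the "discount" described above.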

In other words, the observed prevalence of hate speech and misinformation is a result not only of platforms’ moderation policy but also of their advertising policy—and other variables such as their algorithmic curation. Even if my results rule out significant inefficiencies in Twitter’s moderation policy, there is no data to illuminate how far their advertising policy is from being socially optimal. Governments scrutinize the removal of toxic content but have paid little attention to ad load policies.

There are widespread misperceptions about moderation

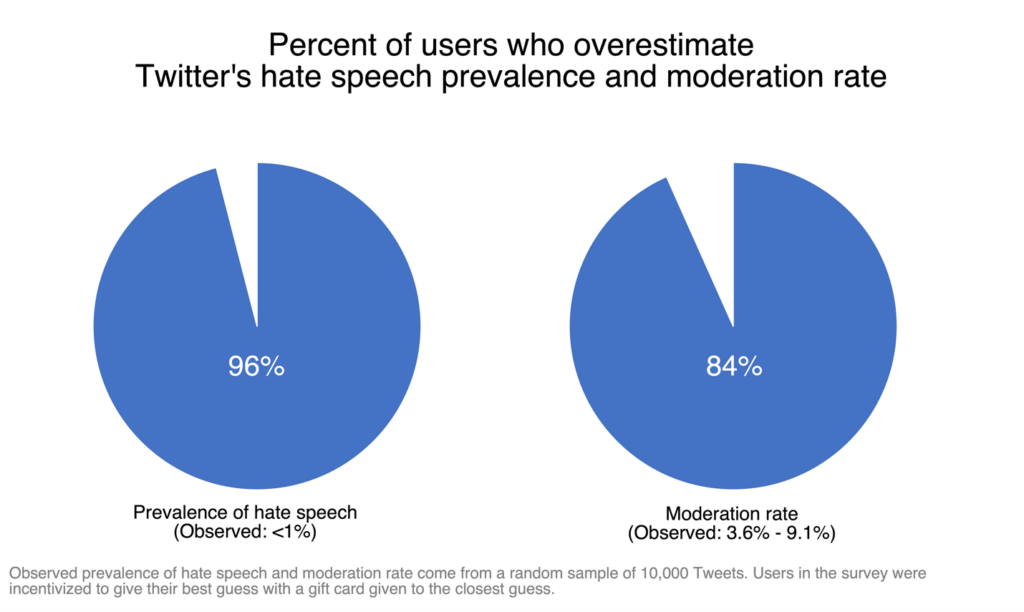

Lastly, content moderation is widely misperceived, partly due to the scarcity of information about it. The accompanying chart shows that nearly all users in my survey overestimate the prevalence of hate speech and the likelihood of moderation on Twitter. Platforms have been more transparent recently, but, for example, there was no official data about the prevalence of rule violations on Twitter (less than 0.1% of all impressions) until July 2021. The likelihood of moderation remained a mystery until the Facebook Files leaked a 3%-5% rate.

Obtaining independent, experimental data about user behavior on social media is feasible. When paired with simple economics, the data improves our understanding of why platforms self-regulate, how users respond to moderation, and where to focus cost-benefit analyses. Nonetheless, policy-related questions remain, such as the real-world effect of moderation and the behavioral impacts of platforms’ advertising policies.
